Manage Complexity in Software Development

Monty empowers your team to manage complexity, streamline planning, and gain deep insights into your codebase.

Monty's Core Capabilities

Designed to augment your team's cognitive capabilities.

Accelerate Planning

Monty builds a semantic model of your application, allowing you to check for incomplete or inconsistent requirements before building. This model then helps Monty plan your work.

Automate Workflow

Analysis is not a one-shot question. Monty iterates over your code and context database to piece together a complete response.

Manage Complexity

Monty uses network analysis to evaluate and visualize code complexity. Identify the most challenging parts of a codebase and work with Monty to refactor them and reduce that complexity.
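The specific network-analysis method Monty uses isn't described here. As a generic illustration only, a common starting point is to count each function's fan-in (how many functions call it) and fan-out (how many functions it calls) in a call graph; heavily connected functions tend to be the hardest to change safely. This is a minimal sketch assuming a hypothetical call graph stored as a dict mapping each function name to the set of names it calls. It is not Monty's implementation or API.

```python
from collections import defaultdict


def degree_ranking(call_graph: dict[str, set[str]]) -> list[tuple[str, int, int]]:
    """Return (function, fan_in, fan_out) rows, most connected first.

    Generic illustration, not Monty's algorithm: total degree
    (fan-in + fan-out) is used as a rough complexity signal.
    """
    # Fan-out: number of distinct functions each function calls.
    fan_out = {fn: len(callees) for fn, callees in call_graph.items()}

    # Fan-in: number of distinct functions that call each function.
    fan_in: dict[str, int] = defaultdict(int)
    for callees in call_graph.values():
        for callee in callees:
            fan_in[callee] += 1

    # Include functions that are only ever called (never listed as keys).
    nodes = set(call_graph) | set(fan_in)
    rows = [(n, fan_in.get(n, 0), fan_out.get(n, 0)) for n in nodes]

    # Sort by total degree (descending), then by name for stable output.
    rows.sort(key=lambda r: (-(r[1] + r[2]), r[0]))
    return rows


# Hypothetical example call graph.
call_graph = {
    "main": {"load", "process", "save"},
    "process": {"validate", "transform", "save"},
    "load": {"validate"},
    "transform": set(),
}

for name, fan_in, fan_out in degree_ranking(call_graph):
    print(f"{name}: fan-in={fan_in}, fan-out={fan_out}")
```

Here `process` ranks first because it is both called and calls several other functions. Real complexity analyses usually combine several measures (for example, cycles in the dependency graph or a design structure matrix), so treat degree as a rough first pass.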

Incorporate Multiple Resources

Monty maintains multiple local RAG (retrieval-augmented generation) databases, so code, documentation, regulations, and other resources can be incorporated into your analysis.

Build Context

For each analysis, Monty builds a dedicated context database. Results from code analysis and LLM responses populate this database, and Monty feeds relevant entries back to the LLM as needed to complete the analysis.

How Teams Use Monty

Real solutions to everyday development challenges.

Consistent Requirement Analysis

Ensuring requirements are clear, complete, and aligned with project goals is challenging. Monty captures and evaluates requirements in a centralized database, checking for internal consistency and identifying gaps that need stakeholder input to keep projects on track.

Comprehensive System Analysis

System-wide changes require careful consideration of dependencies and performance. Monty performs iterative analyses focused on performance, scalability, and security, using data from previous analyses and external tools to provide reliable recommendations for large-scale updates.

Improved Team Consistency

Consistent practices are essential from planning through development. Monty helps teams prepare clear, standards-aligned feature plans and tasks before work begins, ensuring cohesive code and alignment with project goals across all contributors.

Efficient Refactoring and Modernization

Modernizing legacy systems is complex and requires managing interdependent changes. Monty infers requirements for new architectures, facilitates integration with existing code, and supports iterative refactoring to streamline the modernization process.

Accelerated Onboarding

New developers face a steep learning curve with complex codebases. Monty accelerates onboarding by generating code summaries, providing team-aligned documentation, and delivering targeted code reviews to help new team members ramp up quickly.

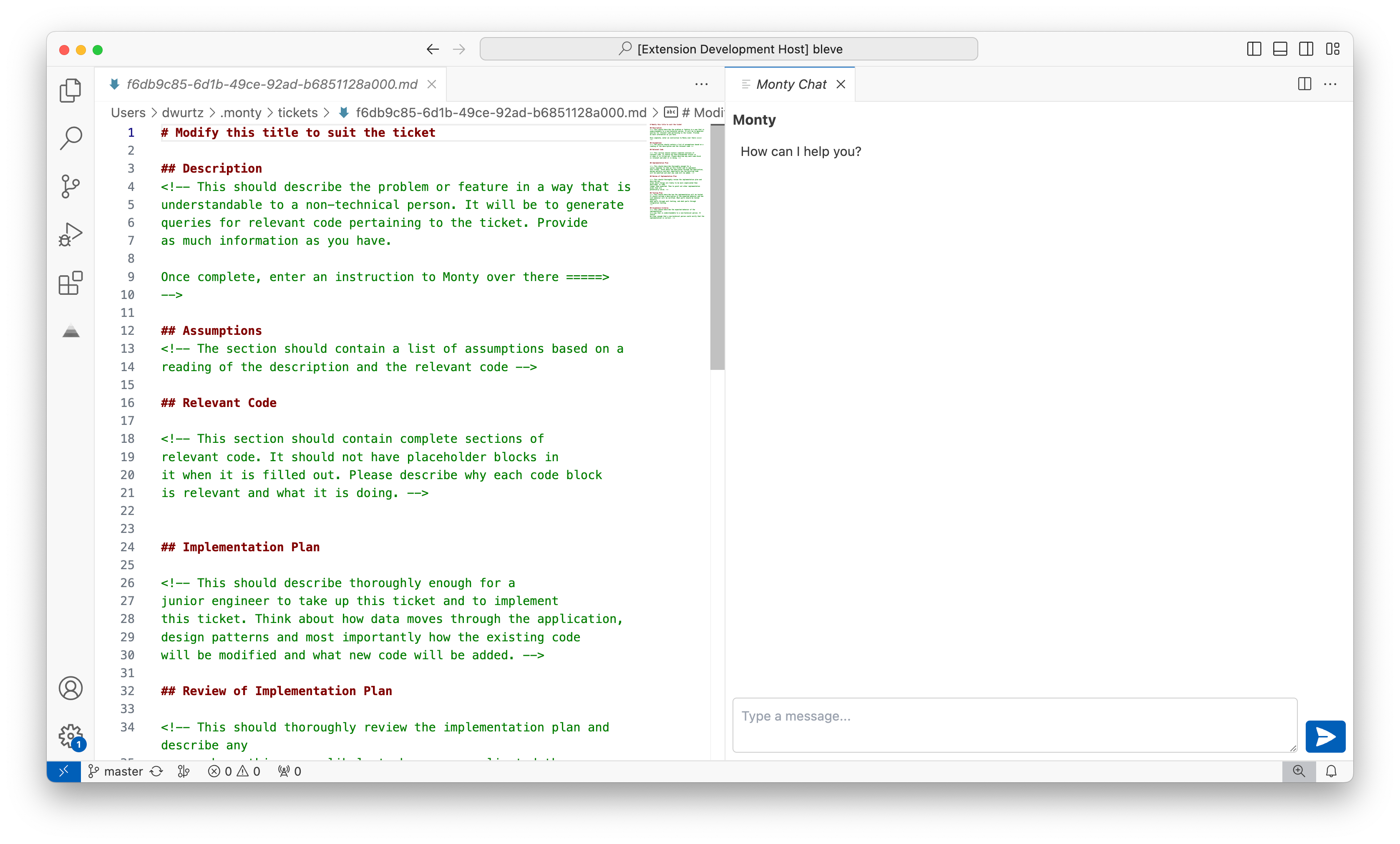

Monty: An AI-enabled assistant inside your IDE

Chat with your code

Specify context

Monty provides helpers that let you precisely specify the relevant files, functions, classes, methods, and code blocks. You can also allow Monty to infer context with RAG and analysis-specific databases.

- Definition or selection: Add this function/class definition or the highlighted text

- File: Add this entire file

- Callers: Add the functions and methods that call this

- Callees: Add the functions and methods that this calls

- Neighborhood: Add the callers and callees of this

- 2-hop neighborhood: Add the neighbors of this and the neighbors' neighbors
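The context helpers above map onto simple queries over a call graph. The following is a minimal sketch, assuming a hypothetical call graph stored as a dict from each function name to the set of names it calls; the function names `callers`, `callees`, and `neighborhood` are illustrative and are not Monty's implementation or API.

```python
from collections import deque

# Hypothetical representation: function name -> set of functions it calls.
CallGraph = dict[str, set[str]]


def callees(graph: CallGraph, fn: str) -> set[str]:
    """Functions and methods that `fn` calls."""
    return set(graph.get(fn, set()))


def callers(graph: CallGraph, fn: str) -> set[str]:
    """Functions and methods that call `fn`."""
    return {caller for caller, called in graph.items() if fn in called}


def neighborhood(graph: CallGraph, fn: str, hops: int = 1) -> set[str]:
    """Functions within `hops` call edges of `fn`, following edges in both directions.

    hops=1 gives callers plus callees; hops=2 adds the neighbors' neighbors.
    """
    seen = {fn}
    frontier = deque([(fn, 0)])  # breadth-first search queue of (node, depth)
    while frontier:
        node, depth = frontier.popleft()
        if depth == hops:
            continue  # do not expand past the requested radius
        for nxt in callers(graph, node) | callees(graph, node):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, depth + 1))
    seen.discard(fn)  # the starting function is not its own neighbor
    return seen


# Hypothetical example call graph.
call_graph: CallGraph = {
    "main": {"load", "process", "save"},
    "process": {"validate", "transform", "save"},
    "load": {"validate"},
    "transform": set(),
}

print(sorted(neighborhood(call_graph, "load")))          # 1-hop
print(sorted(neighborhood(call_graph, "load", hops=2)))  # 2-hop
```

Following edges in both directions matches the "callers and callees" definition of a neighborhood; a breadth-first search keeps the hop count exact even when the call graph contains cycles.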

Use Templates

Monty can optionally use a Markdown document instead of chat history when you’re trying to produce a shareable document, ticket, or specification.

- Curate: Ensure repeatability, efficiency, and high-quality responses from your LLM.

- Standardize: Minimize rework and ambiguity in your documents.

- Collaborate: Edit collaboratively with Monty.

In this mode, Monty uses the content of your document and your instructions to search for relevant code. As you update the document, Monty’s context updates as well.

...and much more

Security, privacy, flexibility

Aligned with the way your organization works.

- Privacy-Friendly: The index is built locally and never leaves your machine. Your code is seen only by your LLM.

- Real-Time Indexing: Codebases are constantly changing. Monty keeps its code index up to date so its knowledge is never stale.

- Language Agnostic: As long as your code is in text files, Monty can read it, regardless of the language you use.

- No Usage or Context Limits: Use Monty as often as needed, with as much context as you need. You use your own API key, so you're in control.

- LLM Agnostic: Monty supports multiple LLMs. Currently, Monty works with OpenAI, Azure OpenAI, Anthropic, and Google AI Studio, as well as OpenAI API-compatible providers such as Groq and Ollama.

Pricing

Monty is free for personal projects

Interested in a commercial license or a conversation? Contact us for details.

Frequently asked questions

- What are the system requirements?

Monty requires Windows (x86_64), Linux (x86_64), or macOS (Apple Silicon), with VSCode and Git installed.

- Which IDEs does Monty support?

At this time, only VSCode is supported, though we plan to support other IDEs (e.g., JetBrains) in the future.

- Does Monty work with private repositories?

Monty works with local repositories and builds local indices. The only code that leaves your system is what's sent to your LLM.

- Does Monty work with remote workspaces?

VSCode remote workspaces and WSL (Windows Subsystem for Linux) are both supported.

- Which LLMs are supported?

Monty currently supports several LLM providers, including OpenAI and OpenAI API-compatible providers such as Groq and Ollama, as well as Azure OpenAI, Anthropic, and Google AI Studio.

Explore More

Insights, guides, and articles to help your team succeed.

Why is software hard?

An introduction to using a design structure matrix (DSM) to understand code complexity

Model-Based Application Modernization

Use semantic modeling as part of application modernization or new project development